A Learning Set was created and the Multichannel Segmentation architecture selected. Training was performed as follows:

• AWS p2.8xlarge, 8 K80 GPUs, 64 GB memory

• No cropping

• 200 samples (160 training, 40 validation)

• 200 epochs (aborted)

• Batch size 16

• Learning rate 0.005

Training took 17.5 hours.
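The sample split and batch size listed above fix how many batches are processed per epoch. The following is a minimal sketch of that arithmetic, not the way the training software partitions data internally. The function `split_samples`, the integer sample IDs, and the fixed seed are hypothetical, and the sketch assumes that the batch size of 16 is the total per training step rather than a per-GPU value.

```python
import math
import random


def split_samples(sample_ids, n_validation, seed=0):
    """Shuffle sample IDs reproducibly and split them into training and validation lists."""
    ids = list(sample_ids)
    random.Random(seed).shuffle(ids)
    return ids[n_validation:], ids[:n_validation]


# Values taken from the settings listed above; the sample IDs are placeholders.
N_SAMPLES = 200
N_VALIDATION = 40
BATCH_SIZE = 16
LEARNING_RATE = 0.005
MAX_EPOCHS = 200

train_ids, val_ids = split_samples(range(N_SAMPLES), N_VALIDATION)

# Number of batches needed to pass over the training set once.
batches_per_epoch = math.ceil(len(train_ids) / BATCH_SIZE)

print(len(train_ids), len(val_ids), batches_per_epoch)  # prints: 160 40 10
```

Under these assumptions each epoch consists of 10 batches over the 160 training samples, with the 40 validation samples held out.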

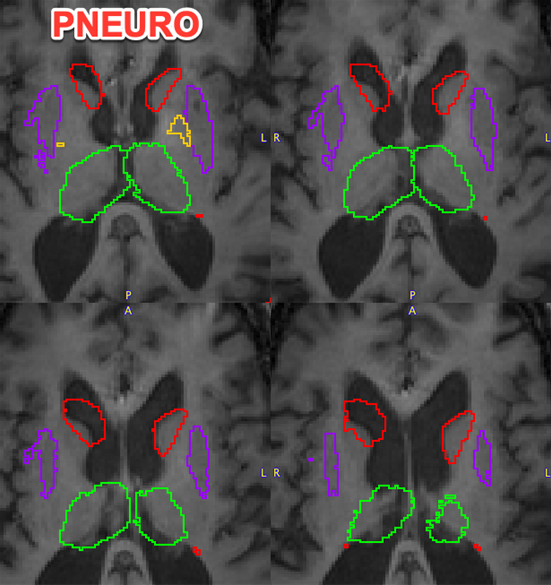

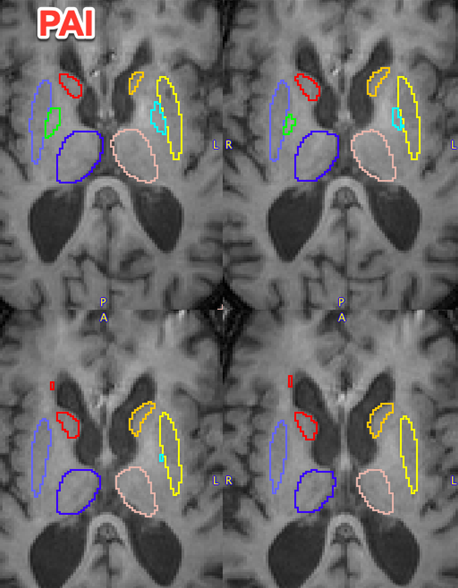

Prediction was tested on samples that had been excluded from training because segmentation failed in PNEURO. The trained model was less sensitive than PNEURO to atrophy and to large variations in ventricular anatomy, and it yielded acceptable deep nuclei VOIs. The example below illustrates the problems that can be encountered in PNEURO due to severe atrophy, and the successful result generated by the trained model:

A small artefact can be noted anterior to the right caudate. This could be cleaned up using the manual VOI tools and may be removed following further training of the model with additional samples.

The model only returns VOIs in the original input image space, while in successful cases PNEURO allows spatial transformation between input and atlas space.

Example data to test the IXI Parcellation model is available in our Demo database (Subject PAI4). The data used for the case study was extracted from the IXI dataset: https://brain-development.org/ixi-dataset/

To try the model yourself, we recommend using the Segment tool (PSEG). The PAI4 example has the required orientation, and any new data used for testing should match it. Cropping is not strictly required but is recommended to reduce the field-of-view to the brain. The model selection is IXI Parcellation (Multichannel Segmentation). The model was trained with 3D data, so Split Slices/Frames is neither available nor required. The data used in these cases was all T1-weighted 3D MR; the performance of the model may vary for data from different hardware and/or with different contrast or pre-processing.
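For readers preparing data outside the tool, the idea of reducing the field-of-view to the brain can be illustrated as a bounding-box crop. This is a sketch using NumPy on a synthetic volume, not the cropping implemented in PSEG. The function `crop_to_foreground` and its `threshold` and `margin` parameters are hypothetical, and a simple intensity threshold is assumed to separate the head from the background.

```python
import numpy as np


def crop_to_foreground(volume, threshold=0.0, margin=2):
    """Return the sub-volume enclosing all voxels above `threshold`, padded by `margin` voxels."""
    mask = volume > threshold
    if not mask.any():
        return volume  # nothing above threshold, so leave the volume unchanged
    coords = np.argwhere(mask)
    # Bounding box of the foreground, expanded by the margin and clipped to the volume.
    lower = np.maximum(coords.min(axis=0) - margin, 0)
    upper = np.minimum(coords.max(axis=0) + 1 + margin, volume.shape)
    slices = tuple(slice(lo, hi) for lo, hi in zip(lower, upper))
    return volume[slices]


# Synthetic stand-in for a 3D T1-weighted image: a bright block in an empty volume.
volume = np.zeros((64, 64, 64), dtype=np.float32)
volume[20:40, 16:48, 10:50] = 1.0

cropped = crop_to_foreground(volume)
print(cropped.shape)  # prints: (24, 36, 44)
```

Real MR data has background noise, so a non-zero threshold or a brain mask would normally be needed. The crop must also preserve the image orientation required by the model.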