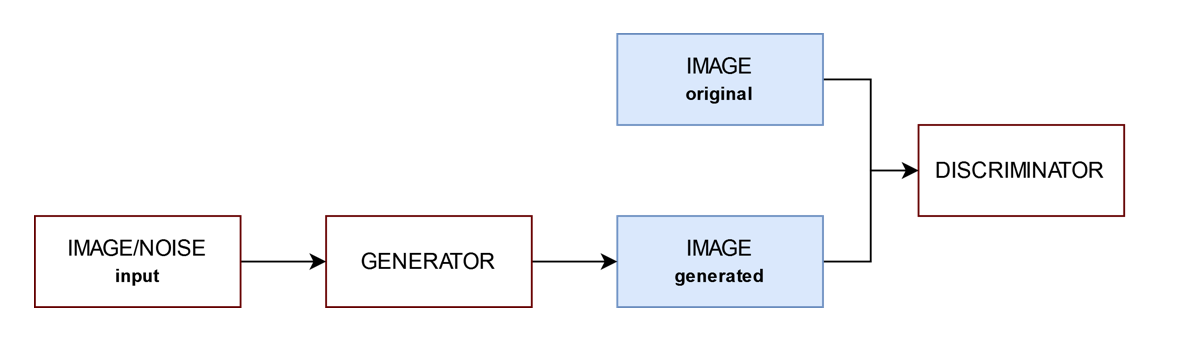

Generative adversarial networks (GANs) are used for image generation, often to produce an "improved" image from a given input. Examples include image denoising and the creation of "super-resolution" images. A GAN consists of a generator and a classifier, the discriminator, which are trained with separate objectives, typically in alternating steps. The discriminator learns to label the images produced by the generator as fake and the original images as real, while the generator learns to fool the discriminator by producing images good enough to be classified as real. This opposition is why the networks are called adversarial.
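To make the two opposing objectives concrete, here is a minimal sketch of the standard binary cross-entropy GAN losses, written in plain Python on the discriminator's output probabilities. The function names are hypothetical, and this is the textbook formulation, not necessarily the loss PAI uses internally. Note that the generator's loss is computed from the discriminator's scores on generated images alone.

```python
import math
from typing import Sequence

EPS = 1e-7  # keeps log() away from 0


def _clip(p: float) -> float:
    """Clamp a probability into (0, 1) so the logarithms stay finite."""
    return min(max(p, EPS), 1.0 - EPS)


def discriminator_loss(d_real: Sequence[float], d_fake: Sequence[float]) -> float:
    """Binary cross-entropy: push D(real) toward 1 and D(fake) toward 0.

    d_real and d_fake hold the discriminator's probability that each
    image in the batch is real.
    """
    real_term = sum(-math.log(_clip(p)) for p in d_real) / len(d_real)
    fake_term = sum(-math.log(1.0 - _clip(p)) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake: Sequence[float]) -> float:
    """Non-saturating generator loss: push D(fake) toward 1.

    Only the discriminator's scores on generated images appear here;
    the real images never enter the generator's objective.
    """
    return sum(-math.log(_clip(p)) for p in d_fake) / len(d_fake)


if __name__ == "__main__":
    # A discriminator that separates real from fake well has a low loss...
    print(discriminator_loss([0.95, 0.9], [0.05, 0.1]))
    # ...which leaves the generator with a high loss, and vice versa.
    print(generator_loss([0.05, 0.1]), generator_loss([0.9, 0.95]))
```

In a training loop, one step updates the discriminator to lower `discriminator_loss`, the next updates the generator to lower `generator_loss`, and the two keep pulling in opposite directions.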

The first GAN available in PAI, StyleGAN2 (from noise), generates images from noise: the generator's input is a fixed-size matrix of random values drawn from a standard normal distribution (mean 0, variance 1). The second GAN available in PAI, Generate from uNet, is also known as Pix2Pix because it generates a new image from an existing one, for example a "super-resolution" image.
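As a sketch of the generator input in the from-noise case, the snippet below draws a fixed-size matrix of standard normal noise (mean 0, variance 1) using only the Python standard library. The `sample_noise` name and the 64 x 64 shape are illustrative assumptions; the size PAI actually uses is fixed by the trained model.

```python
import random
from statistics import fmean, pvariance
from typing import List, Optional


def sample_noise(rows: int, cols: int, seed: Optional[int] = None) -> List[List[float]]:
    """Return a rows x cols matrix of N(0, 1) samples.

    Passing a seed makes the draw reproducible, which is handy when
    comparing images generated from the same noise.
    """
    rng = random.Random(seed)  # private generator; leaves the global random state untouched
    return [[rng.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]


if __name__ == "__main__":
    z = sample_noise(64, 64, seed=0)  # hypothetical 64 x 64 noise input
    flat = [v for row in z for v in row]
    # With 4096 samples the empirical mean and variance sit close to 0 and 1.
    print(len(z), len(z[0]), round(fmean(flat), 3), round(pvariance(flat), 3))
```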

An important aspect is that the generator never sees the images it should generate; only the discriminator sees the original images.

Two GAN architectures are available, both still under development:

- Generate from uNet (the generator is based on the classical uNet architecture)

- StyleGAN2 from noise (see Karras et al. [1])
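The uNet generator maps an image to an image of the same size: an encoder halves the spatial resolution at each level, a decoder doubles it back, and skip connections concatenate each encoder feature map onto the decoder map of matching size. The shape bookkeeping can be sketched in plain Python as follows. The function name, the depth of 4, the 64 base channels and the channel doubling per level follow the classical uNet but are assumptions here, not PAI's actual configuration; padded convolutions are also assumed so that sizes line up exactly.

```python
from typing import List, Tuple

Shape = Tuple[int, int, int]  # (channels, height, width)


def unet_shapes(height: int, width: int, in_channels: int = 1, out_channels: int = 1,
                depth: int = 4, base_channels: int = 64) -> List[Tuple[str, Shape]]:
    """Trace feature-map shapes through a uNet with padded convolutions.

    Each of the `depth` pooling steps halves height and width, so both
    must be divisible by 2**depth for the decoder to restore the input size.
    """
    factor = 2 ** depth
    if height % factor or width % factor:
        raise ValueError(f"height and width must be divisible by {factor}")

    trace: List[Tuple[str, Shape]] = [("input", (in_channels, height, width))]
    skips: List[Shape] = []
    c, h, w = base_channels, height, width

    # Encoder: store each level's map for its skip connection, then pooling
    # halves H and W while the next level's convolutions double the channels.
    for level in range(depth):
        skips.append((c, h, w))
        trace.append((f"enc{level}", (c, h, w)))
        c, h, w = c * 2, h // 2, w // 2

    trace.append(("bottleneck", (c, h, w)))

    # Decoder: up-convolution doubles H and W and halves the channels;
    # concatenating the skip map doubles them again, and the following
    # convolutions bring them back down to the skip's channel count.
    for level in reversed(range(depth)):
        skip_c, skip_h, skip_w = skips[level]
        h, w = h * 2, w * 2
        assert (h, w) == (skip_h, skip_w)  # concatenation needs matching sizes
        c = skip_c
        trace.append((f"dec{level}", (c, h, w)))

    # A final 1x1 convolution maps to the output image's channel count.
    trace.append(("output", (out_channels, h, w)))
    return trace


if __name__ == "__main__":
    for name, shape in unet_shapes(256, 256):
        print(name, shape)
```

For a 256 x 256 single-channel input this traces down to a 1024-channel, 16 x 16 bottleneck and back up to a 256 x 256 output, which is why the input size has to be a multiple of 2**depth.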

These architectures are designed to accept 2D data only. Contact us for more information.

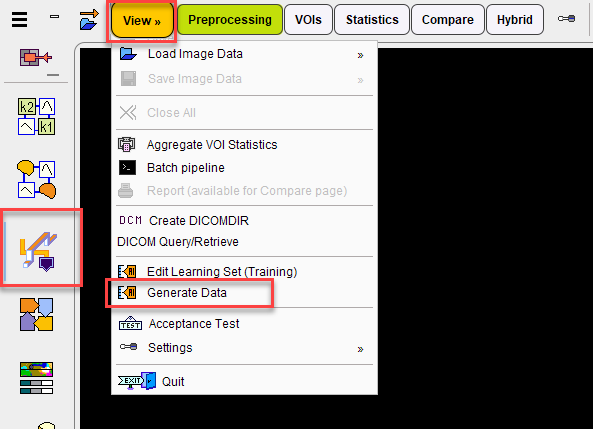

Once trained, GANs are available in the Generate Data tool in the View menu:

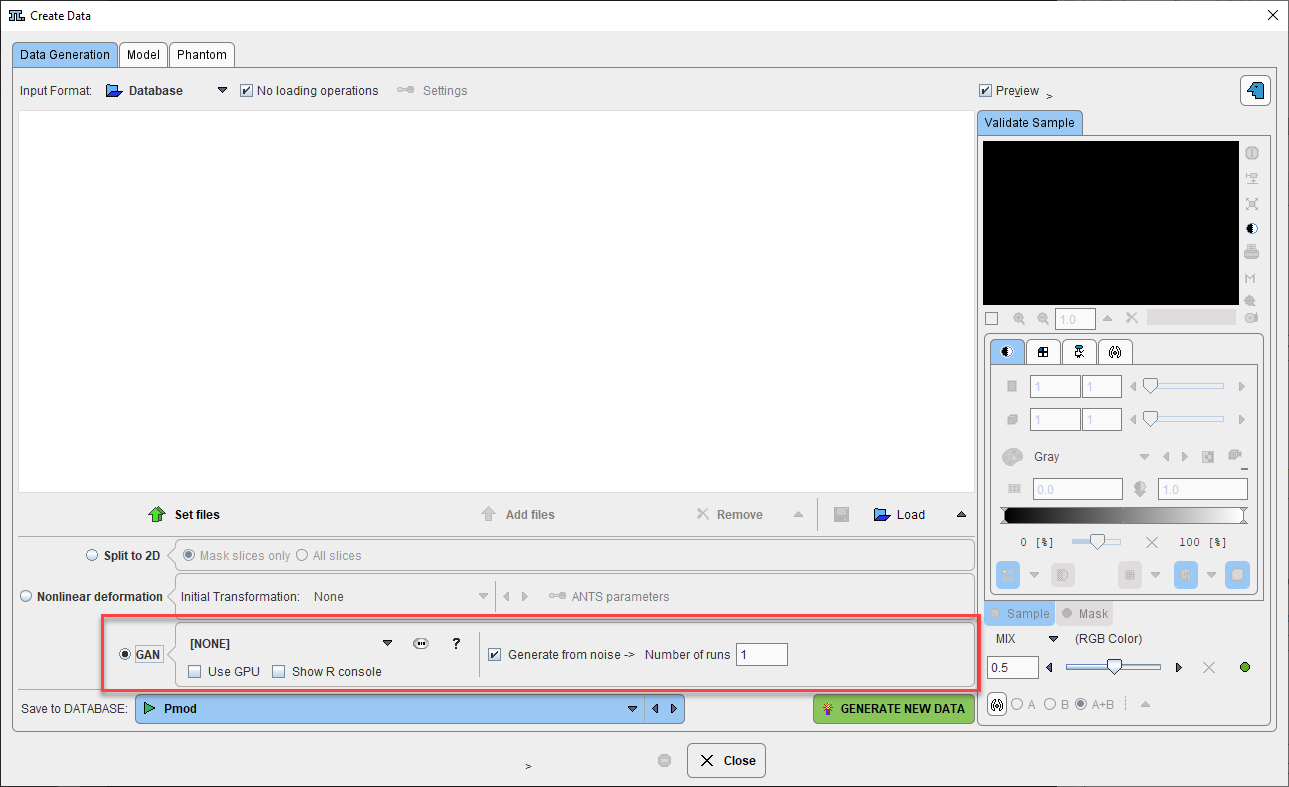

Depending on how the model was trained, the GAN either takes the input images specified in the upper panel or generates images from noise.

The generated images are saved to the specified Database.

References

[1] Karras et al. (2020). Analyzing and Improving the Image Quality of StyleGAN. https://arxiv.org/abs/1912.04958